Machine learning models hold great potential, but unlocking their full value depends on knowing how to deploy them well. Careful management of a machine learning project’s lifecycle is a cornerstone of successful execution. As we build a machine learning system, attention to each stage of that lifecycle lets us plan the project holistically.

A representative deployment scenario might involve an edge device located inside a smartphone manufacturing facility. This edge device runs inspection software that captures an image of each phone, scans it for scratches, and then makes a judgment on its quality. This method, often referred to as automated visual defect inspection, is common practice in manufacturing facilities.

The inspection software controls a camera that captures an image of each smartphone as it comes off the production line. An API call then sends this image to a prediction server. The prediction server’s role is to receive these API calls, examine the image, and decide whether the phone is defective. The resulting prediction is then returned to the inspection software.

Ultimately, depending on this prediction, the inspection software can execute the relevant control decision, dictating whether the phone is fit to continue along the manufacturing line or should be flagged as defective.
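
The flow above can be sketched in a few lines. This is a minimal illustration rather than a real inspection system: the `Prediction` type, the `inspect_phone` function, the decision labels, and the `fake_prediction_server` stand-in for the API call are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Prediction:
    defective: bool
    score: float  # assumed: the model's estimated probability of a defect


def inspect_phone(image: bytes, predict: Callable[[bytes], Prediction]) -> str:
    """Send one image to the prediction server and map the reply to a control decision."""
    prediction = predict(image)
    return "flag_defective" if prediction.defective else "continue_line"


def fake_prediction_server(image: bytes) -> Prediction:
    """Hypothetical stand-in for the API call; a real server would run a trained model."""
    score = 0.9 if b"scratch" in image else 0.1
    return Prediction(defective=score >= 0.5, score=score)


print(inspect_phone(b"clean phone", fake_prediction_server))
print(inspect_phone(b"phone with scratch", fake_prediction_server))
```

Passing the prediction call in as a function keeps the control logic separate from the transport, so the same inspection code could be pointed at a local model or a remote server.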

MLOps infrastructure requirements

Over the past few years, machine learning models have been in the limelight, and the field has advanced considerably. However, putting these models into production requires more than just machine learning code. Typically, a machine learning system comprises a neural network or another algorithm designed to learn a function that maps inputs to outputs.

A closer look at a machine learning system in a production environment reveals that the machine learning code, represented by the orange rectangle, makes up only a small fraction of the entire project’s codebase. Indeed, as little as 5–10% of the code, or even less, may be devoted to machine learning itself.

The gap between the proof-of-concept (POC) and production phases is often referred to as the “proof of concept to production gap.” Much of this gap stems from the considerable effort required to build the extensive codebase that surrounds the basic machine learning model code.

Besides the machine learning code, many other elements must be assembled to enable a functional production deployment. These components frequently revolve around effective data management, including tasks such as data collection, data verification, and feature extraction.

Additionally, once the system is up and running, mechanisms for monitoring incoming data and executing data analysis must be set in place. As a result, various auxiliary components must be developed to facilitate a robust and functional production deployment.

Unfolding the MLOps project lifecycle: From conception to implementation

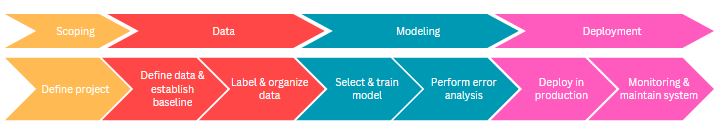

The machine learning project lifecycle is a structured approach to planning and executing a machine learning project. It consists of a series of key steps that drive the project forward. It begins with the scoping phase, which defines the project’s objectives, pins down the specific application of machine learning, and identifies the inputs (X) and the target outputs (Y).

Following the establishment of the project scope, data collection or acquisition springs into action. This process involves identifying the necessary data, setting a benchmark, and arranging the data via systematic labelling and organization.

Post data acquisition, the baton passes to the model training phase. Herein, model selection and training take place, accompanied by procedures of error analysis. Importantly, machine learning projects tend to be iterative in nature. During the error analysis stage, it’s not uncommon to circle back and tweak the model or even revert to the preceding phases to gather additional data, if the situation demands.

Prior to dispatching the system into the world, an indispensable step is executing a comprehensive error analysis, potentially comprising final audits and checks to validate the system’s performance and reliability for the intended application.

It is vital to recognize that the first deployment of the system marks only the halfway point in optimizing performance. The other half of the learning typically comes from running the system in production and observing real-world traffic.

Overcoming hurdles in MLOps model deployments

Deployment hurdles fall into two broad categories. The first is rooted in the underlying machine learning techniques and the statistical aspects of the model. This could mean grappling with model accuracy, overfitting, the selection of suitable algorithms, and hyperparameter tuning. To deploy a machine learning model successfully, these challenges must be tackled head-on to ensure strong performance and reliable predictions.

The second category covers the software engineering aspects of deployment. This involves considerations such as scalability, system integration, compatibility with existing software infrastructure, and the ability to process large volumes of data efficiently. For an effective deployment, these software engineering issues must be addressed to arrive at a robust and scalable solution.

Steering through concept drift and data drift in deployments

Concept drift and data drift are substantial obstacles in many deployments. Concept drift occurs when the underlying relationship between inputs and outputs changes over time, while data drift refers to changes in the distribution of the input data itself. This raises a critical question: what happens if the data changes after the system has been deployed?

Returning to the manufacturing example, suppose a learning algorithm was trained to spot scratches on smartphones under a particular set of lighting conditions. If the factory’s lighting later changes, the data distribution has shifted. Recognizing and understanding how the data has changed is fundamental to deciding whether the learning algorithm needs an update.

Data changes can transpire in various ways. Some alterations unfold gradually, like the evolution of the English language, where fresh vocabulary is introduced at a relatively unhurried pace. Conversely, abrupt changes can also crop up, triggering sudden shocks to the system.
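
One simple way to notice a shift like the lighting change is to track a summary statistic of incoming images and compare it with the value seen during training. The sketch below is one illustrative check among many, not a complete drift detector. It assumes each image has already been reduced to a mean brightness between 0 and 1; the function name `brightness_drift` and the threshold of three standard deviations are assumptions made for this example.

```python
from statistics import mean, stdev


def brightness_drift(baseline: list[float], recent: list[float], threshold: float = 3.0) -> bool:
    """Return True when the recent mean brightness lies more than `threshold`
    baseline standard deviations away from the baseline mean."""
    base_mean = mean(baseline)
    base_std = stdev(baseline)  # needs at least two baseline values
    if base_std == 0:
        return mean(recent) != base_mean
    return abs(mean(recent) - base_mean) / base_std > threshold


training_brightness = [0.50, 0.52, 0.48, 0.51, 0.49]
print(brightness_drift(training_brightness, [0.51, 0.49, 0.50]))  # same lighting
print(brightness_drift(training_brightness, [0.30, 0.32, 0.31]))  # dimmer factory
```

A check of this kind only catches data drift in the chosen statistic. Concept drift, where the right answer for a given input changes, usually needs fresh labels to detect.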

Conquering software engineering obstacles in system implementation with MLOps

Beyond grappling with data-related changes, a successful system deployment involves handling software engineering challenges well. When building a prediction service that receives queries (x) and produces corresponding predictions (y), many design choices arise in the construction of the software. A checklist of questions can help guide decisions through these software engineering challenges.

A pivotal choice when designing your application is whether it needs real-time predictions or batch predictions. Take, for instance, a speech recognition system that must respond within half a second; in such a scenario, real-time predictions are non-negotiable.

Conversely, consider systems built for hospitals, where patient records such as electronic health records are processed in overnight batches to extract relevant information. Here, running the analysis as a nightly batch process suffices.

The demand for either real-time or batch predictions significantly steers the implementation strategy, dictating if the software should be engineered for lightning-fast responses within milliseconds or if it can undertake comprehensive computations throughout the night.
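
The two serving styles can be contrasted in a small sketch. The function names, the `toy_model` placeholder, and the way the latency budget is checked are all assumptions made for illustration; the half-second budget mirrors the speech recognition example above.

```python
import time
from typing import Callable, Sequence


def predict_realtime(
    x: str, model: Callable[[str], str], budget_seconds: float = 0.5
) -> tuple[str, bool]:
    """Answer a single query and report whether it met the latency budget."""
    start = time.perf_counter()
    y = model(x)
    elapsed = time.perf_counter() - start
    return y, elapsed <= budget_seconds


def predict_batch(records: Sequence[str], model: Callable[[str], str]) -> list[str]:
    """Process a whole set of records in one pass, for example from a nightly job."""
    return [model(record) for record in records]


def toy_model(x: str) -> str:
    """Placeholder for a trained model."""
    return x.upper()


print(predict_realtime("hello", toy_model))
print(predict_batch(["record a", "record b"], toy_model))
```

In the real-time path, each request is timed against the budget, so slow responses can be counted and monitored. In the batch path, only total throughput matters, so the model is free to spend more time per record.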

Unlocking MLOps deployment potential: Roadmaps to triumph

In the realm of deploying a learning algorithm, a reckless “switch it on and cross your fingers” tactic is generally frowned upon due to the inherent risks. We can sketch a demarcation line between the initial deployment and the subsequent maintenance and update deployments. Let’s embark on a deeper exploration of these distinctions.

The initial deployment scenario arises when a new product or capability that did not previously exist is introduced. Imagine launching a new speech recognition service. In such circumstances, a popular design pattern is to send a small volume of traffic to the service at first and increase it progressively over time. This gradual ramp-up allows close monitoring and assessment of the system’s performance, supporting a smooth transition to full-scale deployment.
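
A gradual ramp-up can be expressed as a small rule that decides the next traffic share from the latest monitoring result. This is a sketch under assumed parameters: the function name, the doubling step, the 2% error limit, and the choice to roll back to zero on a bad reading are illustrative and not taken from any particular tool.

```python
def next_traffic_fraction(
    current: float,
    error_rate: float,
    max_error_rate: float = 0.02,
    step: float = 2.0,
) -> float:
    """Grow the share of traffic while monitoring looks healthy; otherwise roll back to zero."""
    if error_rate > max_error_rate:
        return 0.0
    return min(1.0, current * step)


fraction = 0.05
for observed_error_rate in [0.01, 0.01, 0.015, 0.01, 0.01]:
    fraction = next_traffic_fraction(fraction, observed_error_rate)
    print(fraction)
```

In practice the decision would rest on several monitored metrics rather than a single error rate, and a rollback would normally trigger investigation before traffic is increased again.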

Another common deployment scenario arises when a task previously performed by humans is to be automated or assisted by a learning algorithm. For example, in an industrial environment where human inspectors have been checking smartphones for scratches, a learning algorithm can be introduced to automate or assist with the task. The fact that humans have been doing the task until now offers additional leeway in choosing the deployment strategy.

By embracing suitable deployment patterns tailored to each situation, the perils and challenges associated with system deployment can be efficiently curtailed.

Shadow mode deployment: A parallel learning algorithm approach

Consider the scenario of visual inspection, where human inspectors have traditionally examined smartphones for defects, specifically scratches. The objective now is to introduce a learning algorithm to automate or assist this inspection process. When shifting from human-powered inspection to algorithmic assistance, a commonly used deployment pattern is shadow mode deployment.

In shadow mode deployment, the learning algorithm runs in parallel with the human inspector. During this preliminary phase, the algorithm’s outputs are not used for any decisions in the factory. Instead, human judgment remains the final arbiter.

For instance, if both the human inspector and the learning algorithm agree on a smartphone being defect-free or possessing a substantial scratch defect, their judgments are aligned. However, discrepancies might arise, such as when the human inspector identifies a minor scratch defect, while the learning algorithm wrongly categorizes it as permissible.

Shadow mode deployment’s genius lies in its capacity to collect data on the learning algorithm’s performance and pit it against human judgment. By functioning in parallel with human inspectors without directly affecting decisions, this deployment strategy allows for a comprehensive evaluation and validation of the learning algorithm’s effectiveness.

Utilizing shadow mode deployment proves to be highly efficient in assessing learning algorithm performance before it’s endowed with decision-making authority.
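
The bookkeeping behind shadow mode can be sketched as follows. The record type, field names, and helper functions are hypothetical. The point is that the factory decision comes only from the human, while both judgments are logged for later comparison.

```python
from dataclasses import dataclass


@dataclass
class ShadowRecord:
    phone_id: str
    human_defective: bool
    model_defective: bool


def shadow_decision(record: ShadowRecord) -> bool:
    """In shadow mode only the human judgment drives the factory decision."""
    return record.human_defective


def agreement_rate(records: list[ShadowRecord]) -> float:
    """Fraction of phones on which the model matched the human inspector."""
    if not records:
        raise ValueError("no shadow records collected yet")
    matches = sum(r.human_defective == r.model_defective for r in records)
    return matches / len(records)


def missed_defects(records: list[ShadowRecord]) -> list[str]:
    """Phones the human flagged as defective but the model let through."""
    return [r.phone_id for r in records if r.human_defective and not r.model_defective]


log = [
    ShadowRecord("p1", human_defective=False, model_defective=False),
    ShadowRecord("p2", human_defective=True, model_defective=True),
    ShadowRecord("p3", human_defective=True, model_defective=False),
    ShadowRecord("p4", human_defective=False, model_defective=False),
]
print(agreement_rate(log))
print(missed_defects(log))
```

The list of disagreements is often more useful than the overall rate, because it points to the specific images worth reviewing during error analysis.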

Canary deployment: Gradual introduction to algorithmic decision-making

Canary deployment, a frequently adopted deployment pattern, is a way to introduce a learning algorithm into real-world decision-making. The algorithm is first rolled out to a small share of the overall traffic, typically around 5% or even less.

By restricting the algorithm’s influence to a minor fraction of the traffic, any potential errors made by the algorithm would only affect this subset. This structure provides a window to closely supervise the system’s performance and gradually escalate the percentage of traffic routed towards the algorithm as confidence in its capabilities burgeons.
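
One common way to send a fixed share of traffic to the canary is to hash a request identifier into a bucket between 0 and 1. The sketch below assumes each request carries a string ID; the function name `routes_to_canary` is hypothetical, and hashing is only one of several possible routing schemes.

```python
import hashlib


def routes_to_canary(request_id: str, canary_fraction: float) -> bool:
    """Deterministically assign a request to the canary based on a hash of its ID."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return bucket < canary_fraction


request_ids = [f"request-{i}" for i in range(10_000)]
canary_share = sum(routes_to_canary(rid, 0.05) for rid in request_ids) / len(request_ids)
print(canary_share)  # close to 0.05
```

Because the assignment depends only on the ID, the same request is routed the same way every time, and raising `canary_fraction` keeps existing canary traffic on the canary while adding more.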

Degree of automation in deployment for Human-AI collaboration

When contemplating system deployment, it’s beneficial to diverge from a binary perspective of deploy or not deploy and instead concentrate on determining the suitable automation level. MLOps tools can assist in implementing deployment patterns, or alternatively, they can be manually implemented. For instance, in the case of visual inspection of smartphones, varying degrees of automation can be considered.

At one end of the spectrum is a purely human-driven system with zero automation. A slightly automated approach involves running the system in shadow mode, where learning algorithms generate predictions but do not directly influence factory operations. Increasing the level of automation, an AI-assisted approach could offer guidance to human inspectors by highlighting regions of interest in images. User interface design plays a crucial role in facilitating human assistance while achieving a higher degree of automation.

Partial automation is the next level up: the learning algorithm’s decision is used whenever it is confident, and the decision is deferred to a human inspector whenever the algorithm is uncertain. This approach is useful when the learning algorithm’s performance is not yet good enough for complete automation.
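
Partial automation is often implemented with confidence thresholds. In this sketch the model is assumed to output a defect probability; the function name `decide`, the thresholds of 0.1 and 0.9, and the decision labels are illustrative values that would need tuning for a real line.

```python
def decide(defect_probability: float, low: float = 0.1, high: float = 0.9) -> str:
    """Act on confident predictions and defer uncertain ones to a human inspector."""
    if defect_probability >= high:
        return "reject"
    if defect_probability <= low:
        return "accept"
    return "defer_to_human"


for probability in [0.02, 0.55, 0.97]:
    print(probability, decide(probability))
```

Narrowing the band between `low` and `high` sends fewer phones to human inspectors, which moves the system closer to full automation.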

Beyond partial automation lies full automation, where the learning algorithm takes the helm for every decision without human intervention. Deployment applications often span a spectrum, gradually transitioning from relying solely on human decisions to relying solely on AI system decisions.

In domains such as consumer internet applications (e.g., web search engines, online speech recognition systems), full automation is effectively required, because having a human review every request is not feasible at that scale. However, in contexts like factory inspections, human-in-the-loop deployments are often preferred, with the goal of finding the optimal design point between human and AI involvement.